- in Production by Bobby Owsinski

How Ai Music Generation Works

When I was writing my latest book, The Musician’s Ai Handbook, one question that I received quite often was one that I had taken somewhat for granted. “How does Ai music generation actually make music?” came up repeatedly, so I did some research and it ended up as a section of the book. Here’s the excerpt.

“Let’s delve into this because the topic is not only fascinating, but highlights some of Ai music’s limitations as well.

Like other Ai systems, a music generator’s neural network has to be trained, and as you would expect, this is done with a dataset of songs. The songs could come from scraping existing music, which might be a vast collection in a particular genre or style. They could also be licensed from a popular artist or producer, as is more recently the case with newer music Ai systems.

The algorithm examines the music using an audio spectrogram (see Figure 1.6), looking for patterns and structures such as the chords, melodies, beats, rhythms, and instrumentation. It then uses this information to create new music that is similar in style and structure to the training material.
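To make the spectrogram idea a bit more concrete, here is a minimal sketch in plain Python. It slices a signal into short overlapping frames and measures how much energy sits at each frequency in every frame. The function names, frame size, and test tone are all assumptions chosen for illustration. A real music Ai would use an optimized FFT library and far larger inputs, and nothing here is taken from any particular generator.

```python
import cmath
import math

def dft_magnitudes(frame):
    """Return the magnitude of each frequency bin (0 .. N/2) for one frame."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags

def spectrogram(samples, frame_size=64, hop=32):
    """Slice the signal into overlapping frames and analyze each one."""
    return [
        dft_magnitudes(samples[start:start + frame_size])
        for start in range(0, len(samples) - frame_size + 1, hop)
    ]

# Hypothetical test signal: a 1,000 Hz sine tone sampled 8,000 times a second.
SAMPLE_RATE = 8000
FRAME_SIZE = 64
TONE_HZ = 1000
samples = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(256)]

spec = spectrogram(samples, frame_size=FRAME_SIZE, hop=32)

# Find the strongest bin in the first frame and convert it back to Hz.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(f"{len(spec)} frames, strongest frequency in frame 0: {peak_hz:.0f} Hz")
```

Each row of `spec` is one moment in time and each column is one frequency band, which is the grid of numbers a spectrogram image is drawn from.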

The thing to remember is that all this requires a large amount of computing horsepower, whether it’s based in the cloud or on your own computer. The limitations begin to emerge when it comes to generating music.

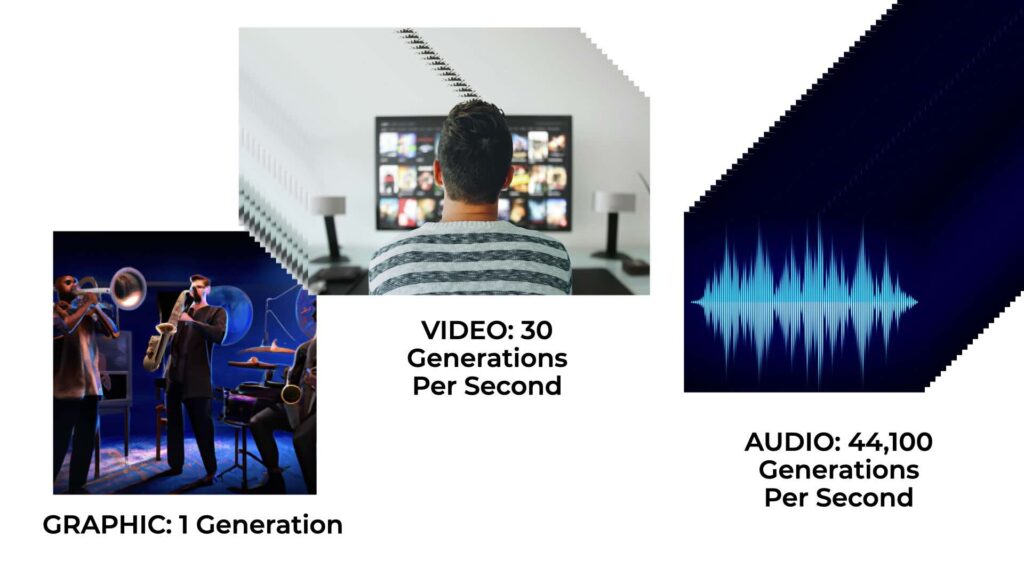

When you ask a graphics Ai to generate an image, it only has to do it once. Sure, it knows what colors to use, how to bend the curves, and how to adjust lighting and shadows, but it adjusts all these parameters just one time to create your image.

For a video, the Ai now needs to generate an image 30 times a second, and for gaming the frequency typically reaches 60 times a second. This puts more strain on an Ai’s system, but it usually has no trouble handling it except for the wait time to generate the result.

The complexity amplifies considerably when it comes to music though, as a music Ai typically has to generate audio samples anywhere from 8,000 to 44,100 times a second (see the image above)! Since operating at this pace is so taxing, it usually begins to throw away frequency data, much as MP3 encoding does when it takes advantage of what is referred to as frequency masking.
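To put those numbers side by side, here is a small back-of-the-envelope sketch. It only counts how often a new output has to be produced each second, using the figures quoted above. The labels and variable names are just for illustration.

```python
# Outputs that must be generated per second, using the figures from the text.
updates_per_second = {
    "video (30 fps)": 30,
    "game (60 fps)": 60,
    "audio (8 kHz)": 8_000,
    "audio (44.1 kHz)": 44_100,
}

video_rate = updates_per_second["video (30 fps)"]
game_rate = updates_per_second["game (60 fps)"]
cd_rate = updates_per_second["audio (44.1 kHz)"]

# How many audio samples fall inside a single video or game frame.
samples_per_video_frame = cd_rate / video_rate
samples_per_game_frame = cd_rate / game_rate

for label, rate in updates_per_second.items():
    print(f"{label:>18}: {rate:>6,} per second ({rate / video_rate:,.1f}x video)")
```

At CD-quality rates that works out to 1,470 audio samples for every single frame of 30 fps video, or 735 for every frame of a 60 fps game.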

This means that the audio resolution of most Ai-generated songs is just not that good – certainly not up to professional standards. It’s possible that a full 44.1kHz/16 bit CD-quality file can be generated, but you usually have to pay a subscription premium for that to happen.

If you require an audio resolution higher than 44.1kHz/16 bit (most record labels now require 96kHz/24 bit mix files in their delivery specs), then your best approach is to download the MIDI file (which is usually a free option on most music Ai platforms), import it into your DAW, then use virtual instruments to generate the sounds you want at a higher resolution (we’ll go over this more in depth in Chapter 3).
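For a sense of what the jump from 44.1kHz/16 bit to 96kHz/24 bit means in raw data, here is a quick calculation for uncompressed audio. It assumes a stereo (two-channel) file, which the text doesn't specify, and the function name is hypothetical.

```python
def pcm_bytes_per_second(sample_rate_hz, bit_depth, channels=2):
    """Uncompressed PCM data rate. Stereo is an assumption, not from the text."""
    return sample_rate_hz * (bit_depth // 8) * channels

cd_quality = pcm_bytes_per_second(44_100, 16)
label_spec = pcm_bytes_per_second(96_000, 24)

# Scale up to one minute of audio, in megabytes (1 MB = 1,000,000 bytes).
cd_mb_per_minute = cd_quality * 60 / 1_000_000
label_mb_per_minute = label_spec * 60 / 1_000_000

print(f"44.1kHz/16 bit: {cd_quality:,} bytes/s, {cd_mb_per_minute:.1f} MB per minute")
print(f"96kHz/24 bit:   {label_spec:,} bytes/s, {label_mb_per_minute:.1f} MB per minute")
print(f"Ratio: {label_spec / cd_quality:.2f}x")
```

Under that stereo assumption, a 96kHz/24 bit file carries a little over three times the data of a CD-quality file for the same length of music.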

To finish up the question of “How does Ai music generation actually make music?”, the music generator doesn’t use a voice chip or oscillators like in a synthesizer to make its sounds. It’s just a stream of 1s and 0s that it sends to your computer’s audio interface digital-to-analog converter, the same as playing back any audio from your computer.”
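As a rough illustration of that last point, the sketch below builds a very short tone as nothing more than a list of numbers, packs them into 16-bit binary samples, and wraps them in a WAV container using Python's standard `wave` module. The tone frequency, duration, and function name are made up for the example. It is not how any particular music Ai produces its output, only a demonstration that digital audio is just sample values.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44_100   # samples per second (CD quality)
BIT_DEPTH = 16         # bits per sample
DURATION_S = 0.01      # a very short clip, for illustration only
FREQ_HZ = 441.0        # hypothetical test tone

def sine_pcm16(freq_hz, duration_s, sample_rate):
    """Return a sine tone as a list of signed 16-bit integer samples."""
    n = int(round(duration_s * sample_rate))
    peak = 2 ** (BIT_DEPTH - 1) - 1
    return [
        int(round(peak * math.sin(2 * math.pi * freq_hz * t / sample_rate)))
        for t in range(n)
    ]

samples = sine_pcm16(FREQ_HZ, DURATION_S, SAMPLE_RATE)

# Pack the numbers into little-endian 16-bit binary, the raw "1s and 0s".
raw_bytes = struct.pack("<%dh" % len(samples), *samples)

# Wrap the raw samples in a WAV container (held in memory here, not on disk).
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(BIT_DEPTH // 8)
    wav.setframerate(SAMPLE_RATE)
    wav.writeframes(raw_bytes)

# Show one sample as the bits a converter would receive.
second_sample_bits = format(samples[1] & 0xFFFF, "016b")
print(f"{len(samples)} samples, {len(raw_bytes)} bytes of audio data")
print(f"Sample 1 as bits: {second_sample_bits}")
```

Swapping `io.BytesIO()` for a filename such as `"tone.wav"` would write a file that any audio player can open.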

You can find out more about The Musician’s Ai Handbook here.